Oct 04, 2023

Enterprise AI/ML roadmap: Charting the path towards a data-driven culture

Artificial intelligence (AI) and machine learning (ML) have captured global attention in recent years, with companies racing to adopt these technologies and ramping up budgets to fully leverage them. Companies quickly discover, however, that realizing the value of these capabilities is a bit more complicated than applying the latest, buzz-worthy ML model to their niche dataset. Without a defined roadmap to build towards AI/ML maturity and a team culture built around data best practices, successfully deploying even the most basic ML models to production can be a difficult milestone to reach.

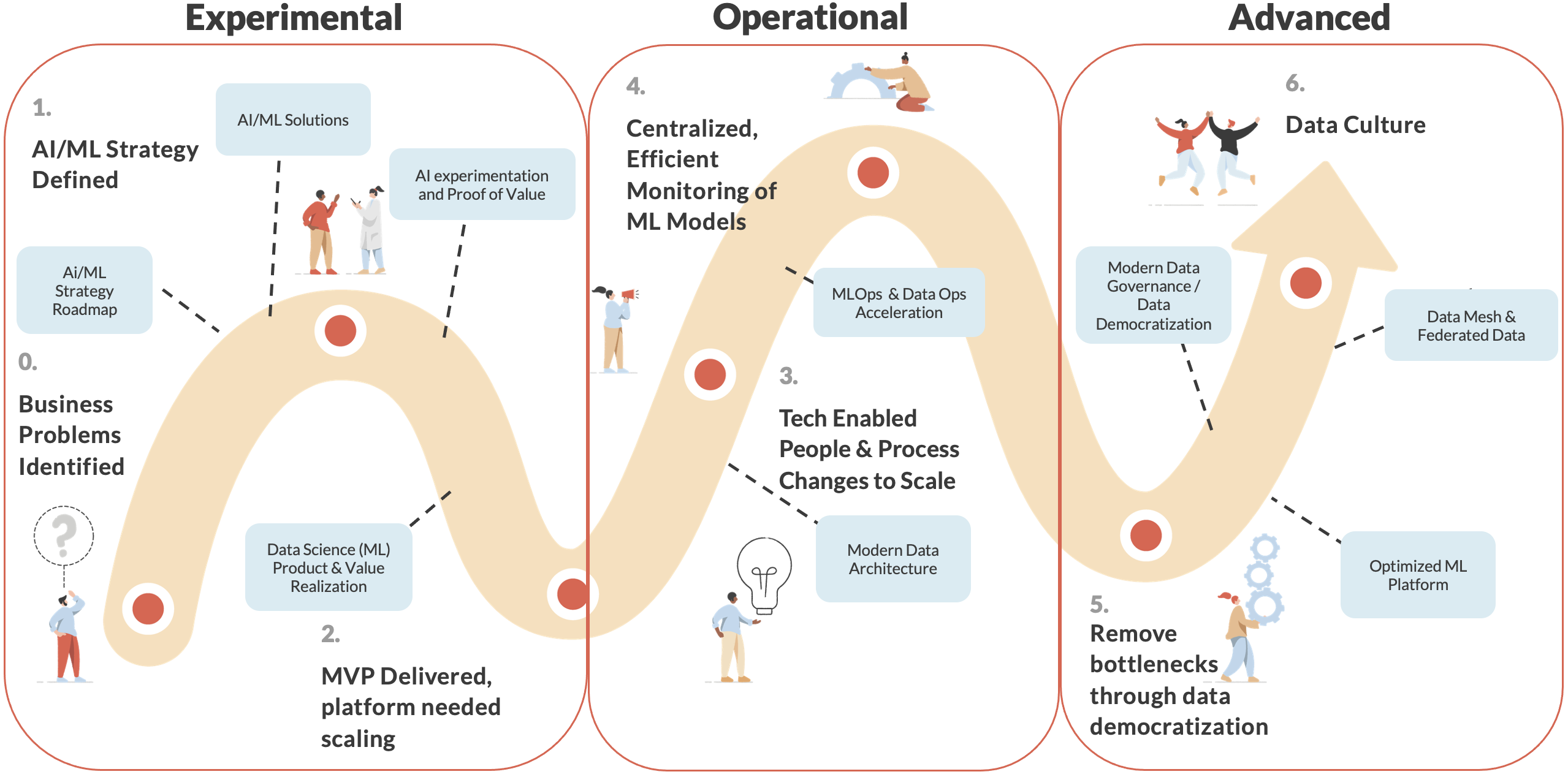

Figure 1: 3-Stage enterprise AI/ML roadmap.

In this blog post, we will distill Credera’s approach to an AI/ML roadmap into three stages and illustrate what organizations look like at each level of maturity. This unified roadmap is industry-agnostic and can apply to organizations of all sizes, whether you are a company considering these capabilities for the first time or are a more established enterprise that has been deploying models for years.

The three distinct stages are experimental, operational, and advanced:

Experimental stage: The organization has initiated AI/ML experiments but has yet to fully integrate data products into their core business processes.

Operational stage: AI/ML has been integrated into essential business processes to enhance decision-making, and well-defined pipelines exist to deploy data products, although these pipelines still rely largely on manual effort.

Advanced stage: The organization is supported by strong software infrastructure and a data-driven culture focused on continuous automation and innovation.

Let’s dive deeper into each of these stages.

1. Experimental

Organizations at this stage have just begun harnessing the potential of AI/ML in their business. While they may have built some useful models, they are still building the case to justify ML workflows in the business. Teams will spend significant time on research to understand which aspects of the business can benefit from automation and which specific models should be used. They are still discovering how AI/ML can help their business succeed in ways that previous methods could not.

Aligning with business goals

Management plays a critical role in the experimental stage by defining the strategic goals that leadership aims to achieve. What critical business processes would benefit from highly accurate predictions? Which workflows could be sped up by algorithms handling repetitive, time-consuming tasks? What are some product features that could be personalized to better serve customers? While it may be tempting to jump right in and start investing heavily in tackling the company’s biggest problems, first assessing maturity in areas that present near-term AI/ML opportunities is much more valuable. At this early stage, it is important to validate business impact first so that investment leads to genuine, long-term acceleration.

Model experimentation

This discovery phase may take time, but with the right plan and guardrails in place, it will outline the big picture while developers begin experimenting with proofs of concept (POCs). While models such as XGBoost and large language models (LLMs) often garner the most attention, it is the underlying data they are built on that allows them to excel.

Therefore, developer teams should make sure to assess the viability of existing and potential data resources. Datasets can vary widely in quality and relevance, so a thorough investigation will ultimately pay dividends during downstream model development. Finally, a specialized team of data scientists and subject matter experts (SMEs) will be required to oversee these initiatives. With the right data and team in place, organizations can carry out POCs to assess the practicality of incorporating AI/ML effectively.
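As a minimal illustration of what a first-pass data viability check might look like, the sketch below profiles a list of records for missing values, distinct counts, and near-constant columns. The function names, column names, and thresholds are hypothetical; a real assessment would also cover relevance to the business question, freshness, and lineage.

```python
from collections import Counter
from typing import Any


def profile_dataset(records: list[dict[str, Any]]) -> dict[str, dict[str, float]]:
    """Summarize completeness and variety for every column seen in the records."""
    columns = sorted({key for row in records for key in row})
    total = len(records)
    report: dict[str, dict[str, float]] = {}
    for col in columns:
        # Treat absent keys, None, and empty strings as missing values.
        present = [row[col] for row in records if row.get(col) not in (None, "")]
        counts = Counter(present)
        report[col] = {
            "missing_rate": 1 - len(present) / total if total else 1.0,
            "distinct": len(counts),
            # Share of the most common value; close to 1.0 means a near-constant column.
            "top_share": counts.most_common(1)[0][1] / len(present) if present else 0.0,
        }
    return report


def flag_columns(report, max_missing=0.3, max_top_share=0.95):
    """Return columns too sparse or too uniform to be useful features (hypothetical thresholds)."""
    return sorted(
        col
        for col, stats in report.items()
        if stats["missing_rate"] > max_missing or stats["top_share"] > max_top_share
    )


sample = [
    {"customer_id": 1, "region": "TX", "last_purchase": 50},
    {"customer_id": 2, "region": "TX", "last_purchase": None},
    {"customer_id": 3, "region": "TX", "last_purchase": None},
    {"customer_id": 4, "region": "TX"},
]
print(flag_columns(profile_dataset(sample)))
```

Here `last_purchase` is flagged for sparsity and `region` for carrying no signal, which is the kind of finding worth surfacing before any model work begins.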

Dive in: Credera client examples

When working with one organization that was building its first ML platform, Credera used the steps above to kickstart the journey. The client was building a recommender system to help nonprofits identify potential donors, and while the team was new to ML modeling, it had a wealth of data ready to use for the platform. Credera helped further enrich these data sources and connect them to the right team of data and domain experts to unlock valuable insights for the client’s customers.

Credera also collaborated with a luxury hospitality client’s product design team to bring a recommendation engine to life on their website. Previously, customers had a difficult time searching through a long list of available properties. To improve this experience, Credera outlined the data and software engineering approach, and helped develop a minimum viable product (MVP) recommendation engine. The ML model ingested user preferences and real-time behaviors to serve tailored properties, which in turn increased retention across subscribers.
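To make "user preferences and real-time behaviors" concrete, here is a hedged sketch of one simple way to combine them: a content-based recommender that blends a stated preference vector with the average features of recently viewed properties, then ranks unseen properties by cosine similarity. The feature dimensions, property names, and blending weight `alpha` are illustrative assumptions; this is not the model built for the client.

```python
import math

# Hypothetical property features: [beach, ski, city, spa]
catalog = {
    "villa_malibu": [1, 0, 0, 1],
    "chalet_aspen": [0, 1, 0, 1],
    "loft_nyc": [0, 0, 1, 0],
    "resort_maui": [1, 0, 0, 0.5],
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_profile(preferences, recent_views, catalog, alpha=0.6):
    """Blend long-term preferences with the average of recently viewed items."""
    if not recent_views:
        return list(preferences)
    recent = [
        sum(catalog[pid][i] for pid in recent_views) / len(recent_views)
        for i in range(len(preferences))
    ]
    return [alpha * p + (1 - alpha) * r for p, r in zip(preferences, recent)]


def recommend(preferences, recent_views, catalog, k=3, alpha=0.6):
    """Rank properties the user has not just viewed by similarity to the blended profile."""
    profile = build_profile(preferences, recent_views, catalog, alpha)
    candidates = [pid for pid in catalog if pid not in recent_views]
    candidates.sort(key=lambda pid: cosine(profile, catalog[pid]), reverse=True)
    return candidates[:k]


beach_lover = [1, 0, 0, 0.5]
print(recommend(beach_lover, [], catalog, k=2))
print(recommend(beach_lover, ["chalet_aspen"], catalog, k=2))
```

With no session activity the closest stated match ranks first; after the user browses a spa-heavy chalet, the blended profile lifts the beach property that also offers a spa.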

2. Operational

Organizations at the operational stage have well-defined ML pipelines and governance frameworks for developing and deploying ML models, and those models are fully integrated into essential business operations. These organizations are also starting to evaluate mechanisms for monitoring their models in production, to reduce the risk that the models become stale over time due to data drift.

Integration of tools and techniques used to scale

After getting a few deployments under their belt, organizations quickly realize that models need to be managed and monitored. Open-source tools such as Docker, Kubernetes, and Apache Airflow can be used to package, orchestrate, and deploy models to production environments. In addition, organizations can use the ML services of their cloud platforms, such as Amazon SageMaker, Azure Machine Learning, and Google Cloud Vertex AI, for deployment. These services can also monitor the quality of machine learning models in real time, enabling organizations to set up automated alerts when model quality deviates because of data drift or anomalies. This allows organizations to scale their AI/ML capabilities efficiently while maintaining high-quality performance.
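One common way to quantify input data drift, the kind of deviation such alerts watch for, is the population stability index (PSI): for each bin, multiply the difference between the production share and the baseline share by the log of their ratio, then sum. The sketch below compares a feature's production distribution against its training-time baseline. The bin edges and the 0.2 alert threshold are assumptions; 0.2 is a widely used rule of thumb rather than a standard, and managed services compute their own drift statistics.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges, eps=1e-6):
    """Fraction of values in each bin defined by sorted edges (eps avoids log(0))."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(baseline, current, edges):
    """Population stability index: sum of (actual - expected) * ln(actual / expected)."""
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_alert(baseline, current, edges, threshold=0.2):
    """Return the PSI score and whether it exceeds the (assumed) alert threshold."""
    score = psi(baseline, current, edges)
    return score, score > threshold


baseline = list(range(1, 11)) * 10    # feature values seen at training time
shifted = [v + 4 for v in baseline]   # production values after an upstream change
edges = [3.5, 6.5]
print(drift_alert(baseline, baseline, edges))  # identical distributions: PSI is 0.0, no alert
print(drift_alert(baseline, shifted, edges))   # shifted distribution: large PSI, alert
```

Running a check like this on a schedule per feature, and routing the boolean to a pager or chat channel, is the essence of the automated alerting described above.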

MLflow on the Databricks Runtime for Machine Learning, or Snowpark on Snowflake’s cloud data warehouse, can similarly accelerate growth by providing model management and monitoring capabilities that help organizations streamline and scale their AI/ML work.

Challenges faced in operations

Because of data drift, models often require updates after deployment to maintain accuracy, and keeping them performant and scalable becomes increasingly challenging over time if this is done manually. Automated solutions to these challenges are therefore not luxuries but necessities. The set of practices that automates ML model deployment and monitoring is commonly referred to as MLOps, and adopting it marks this stage of maturity.

For example, with Amazon SageMaker Model Monitor, a team can set up continuous monitoring to track the quality of ML models in production. It notifies the team when prediction quality deviates, which enables proactive corrective action such as retraining models or auditing upstream pipelines, without anyone having to monitor models manually or build additional tooling.
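Managed services handle this for you, but the underlying idea is simple enough to sketch. The hypothetical `QualityMonitor` below tracks rolling accuracy over the most recent labeled predictions and signals an alert when it falls more than a tolerance below the accuracy measured at deployment. It is not the SageMaker Model Monitor API; the class name, window size, and tolerance are assumptions for illustration.

```python
from collections import deque


class QualityMonitor:
    """Hypothetical rolling-accuracy monitor for a deployed classifier."""

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline_accuracy     # accuracy measured when the model was deployed
        self.tolerance = tolerance            # allowed drop before alerting
        self.outcomes = deque(maxlen=window)  # keeps only the most recent results

    def rolling_accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else float("nan")

    def record(self, prediction, actual) -> bool:
        """Log one labeled prediction; return True if the team should be alerted."""
        self.outcomes.append(prediction == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # wait until the window is full to avoid noisy early alerts
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = QualityMonitor(baseline_accuracy=0.9, tolerance=0.05, window=10)
for _ in range(10):
    monitor.record(prediction=1, actual=1)
print(monitor.record(prediction=1, actual=0))  # 9/10 correct: no alert
print(monitor.record(prediction=1, actual=0))  # 8/10 correct: below 0.85, alert
```

In practice the alert would kick off the corrective actions mentioned above, such as a retraining job or an audit of upstream pipelines.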

Another common challenge with ML models is interpretability, a lack of which can make it difficult for stakeholders to trust model outputs. It is important to take this into consideration when selecting a model type. Simpler methods such as linear regression are often a good choice for this exact reason. Performing feature importance analysis and generating corresponding visuals can address this challenge for more complex model types.
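Permutation importance is one model-agnostic way to perform this feature importance analysis: shuffle one feature at a time and measure how much a metric drops. The sketch below implements it in plain Python for a model expressed as a function; the toy model and data are assumptions. In practice, scikit-learn provides `sklearn.inspection.permutation_importance`, and SHAP values are a popular alternative.

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == target for row, target in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical model that only looks at feature 0."""
    return int(row[0] > 0.5)


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y))  # feature 1 scores exactly 0.0
```

The resulting scores are what typically feed the bar charts shown to stakeholders to explain which inputs drive a model.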

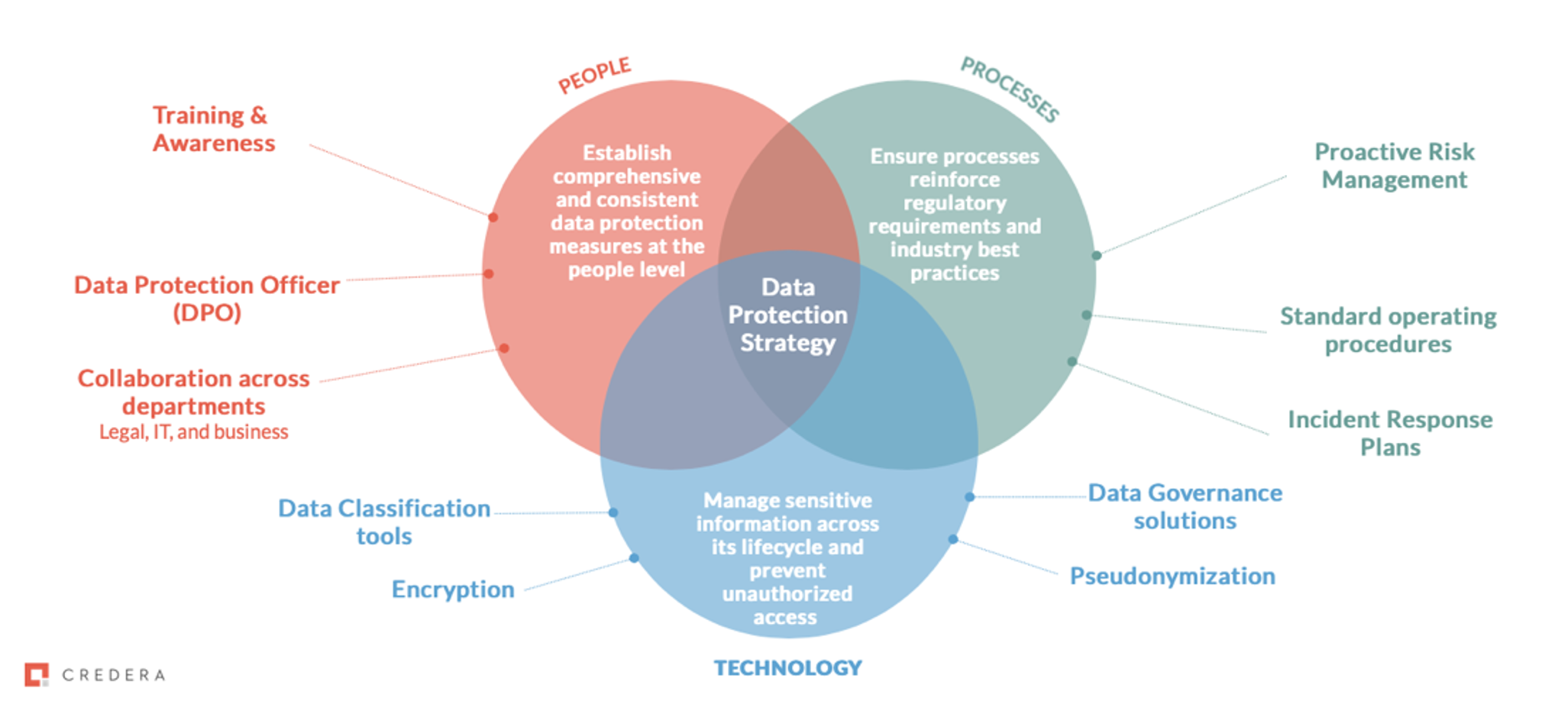

Finally, organizations at the operational stage often deal with sensitive data, which can pose security and compliance challenges. Organizations must ensure that their models are secure and compliant with relevant regulations. Some of the measures organizations can take include protecting sensitive data using encryption or access control. Make sure that during model training sensitive data is not leaked. Processes like anonymization, data masking, and de-identification can protect against this. Also, consider using secure data centers or private clouds for model deployment with restricted network access.

Figure 2: Data protection strategy.
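As a small, hedged example of de-identification before training, the sketch below drops a direct identifier, replaces an ID with a keyed hash (HMAC-SHA256) so records can still be joined consistently, and masks email addresses. The field names are hypothetical, and in practice the key would come from a secrets manager rather than source code. Keyed hashing is pseudonymization rather than full anonymization: anyone holding the key and a list of candidate IDs can re-link records, so the key itself must be access-controlled.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic keyed hash: the same input and key always give the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Hide most of the local part while keeping the domain."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}" if domain else "***"


def deidentify(record: dict, key: bytes, id_fields=("patient_id",),
               email_fields=("email",), drop_fields=("name",)) -> dict:
    """Return a copy of the record that is safer to use as training data (hypothetical fields)."""
    clean = {}
    for field, value in record.items():
        if field in drop_fields:
            continue  # direct identifiers are removed entirely
        if field in id_fields:
            clean[field] = pseudonymize(str(value), key)
        elif field in email_fields:
            clean[field] = mask_email(value)
        else:
            clean[field] = value
    return clean


KEY = b"replace-with-key-from-a-secrets-manager"  # placeholder; never hard-code real keys
row = {"patient_id": "P-001", "name": "Jane Doe", "email": "jane@example.com", "age": 42}
print(deidentify(row, KEY))
```

Because the hash is deterministic for a given key, the same patient maps to the same token across datasets, which keeps joins and deduplication possible without exposing the raw ID.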

Dive in: Credera client examples

A Credera team recently worked with a leading integration platform as a service (iPaaS) provider to standardize their MLOps workflow. While they had models in production, there was a lack of standardized best practices for development, spanning from model experimentation to deployment.

Credera addressed the challenges of maintaining and iterating on models by automating retraining and enabling models to be deployed with no downtime. The team also created project templates and guidelines so the client could move models to production quickly and efficiently.
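One simple pattern behind zero-downtime model updates is to load and warm up the retrained model alongside the live one, then swap a single reference atomically so requests never see a half-loaded model. The `ModelServer` class below is a hypothetical, in-process sketch of that idea, not the client's implementation; production systems usually achieve the same effect at the infrastructure level with blue/green or canary deployments.

```python
import threading
from typing import Any, Callable

Model = Callable[[Any], Any]


class ModelServer:
    """Hypothetical in-process server that swaps models without pausing predictions."""

    def __init__(self, model: Model, version: str):
        self._lock = threading.Lock()
        self._current = (model, version)

    def predict(self, features):
        with self._lock:
            model, _ = self._current  # grab a consistent reference, then release the lock
        return model(features)        # inference runs outside the lock

    @property
    def version(self) -> str:
        with self._lock:
            return self._current[1]

    def deploy(self, candidate: Model, version: str, warmup_input) -> None:
        candidate(warmup_input)  # fail fast (and warm caches) before taking live traffic
        with self._lock:
            self._current = (candidate, version)  # single atomic swap


server = ModelServer(lambda x: x + 1, "v1")
print(server.predict(10), server.version)  # 11 v1
server.deploy(lambda x: x * 2, "v2", warmup_input=0)
print(server.predict(10), server.version)  # 20 v2
```

If the warm-up call raises, the swap never happens and the previous version keeps serving, which is the property that makes automated redeployment safe to run unattended.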

For one of the world’s leading biopharma companies, Credera architected and built an MVP platform for analyzing unstructured data using AWS SageMaker and state-of-the-art natural language processing (NLP) techniques. The client had already developed a strong, compliant platform for analyzing structured data and was using AWS SageMaker to enable the scaling and management of the models.

However, many of the insights needed to optimize the business were locked in the unstructured data. Credera helped implement the MVP platform, enabling key medical and commercial use cases in three months, while also building a long-term roadmap to fully realize the platform vision. The implementation rapidly delivered value to the business by enabling a deeper understanding of the voice of the customer.

3. Advanced

As organizations progress toward the advanced stage of the AI/ML roadmap, they have established a solid foundation that prioritizes data quality, governance, and management. At this stage, the ultimate objective is to automate the entire ML pipeline by implementing MLOps and DataOps methodologies.

Advanced stage enterprise AI/ML goals

AI/ML has the potential to transform how companies operate by automating processes, improving decision-making, and optimizing resources. At the advanced stage, organizations can identify a business use case and then rapidly develop and deploy an ML model to realize the value.

These mature enterprises can generate new revenue streams by creating innovative products or services that leverage AI/ML, for example, by using cutting-edge technologies like LLMs. Importantly, not only do they have these advanced offerings, but they also have an efficient process to take a data product from inception to delivery. By reducing the time needed to deploy these products, businesses realize more value from their AI/ML investments and foster a data-driven culture internally.

Tools and techniques that empower complex analytics

At the advanced stage, enterprises can apply complex techniques such as natural language processing, computer vision, and reinforcement learning to tackle challenging use cases. These approaches require specialized knowledge and enable businesses to develop more sophisticated products that handle unstructured data, generate highly accurate forecasts, and adapt to changing environments. As their data volumes grow, advanced organizations may adopt big data platforms like Redshift, Databricks, Kafka, and Cassandra for storage and processing.

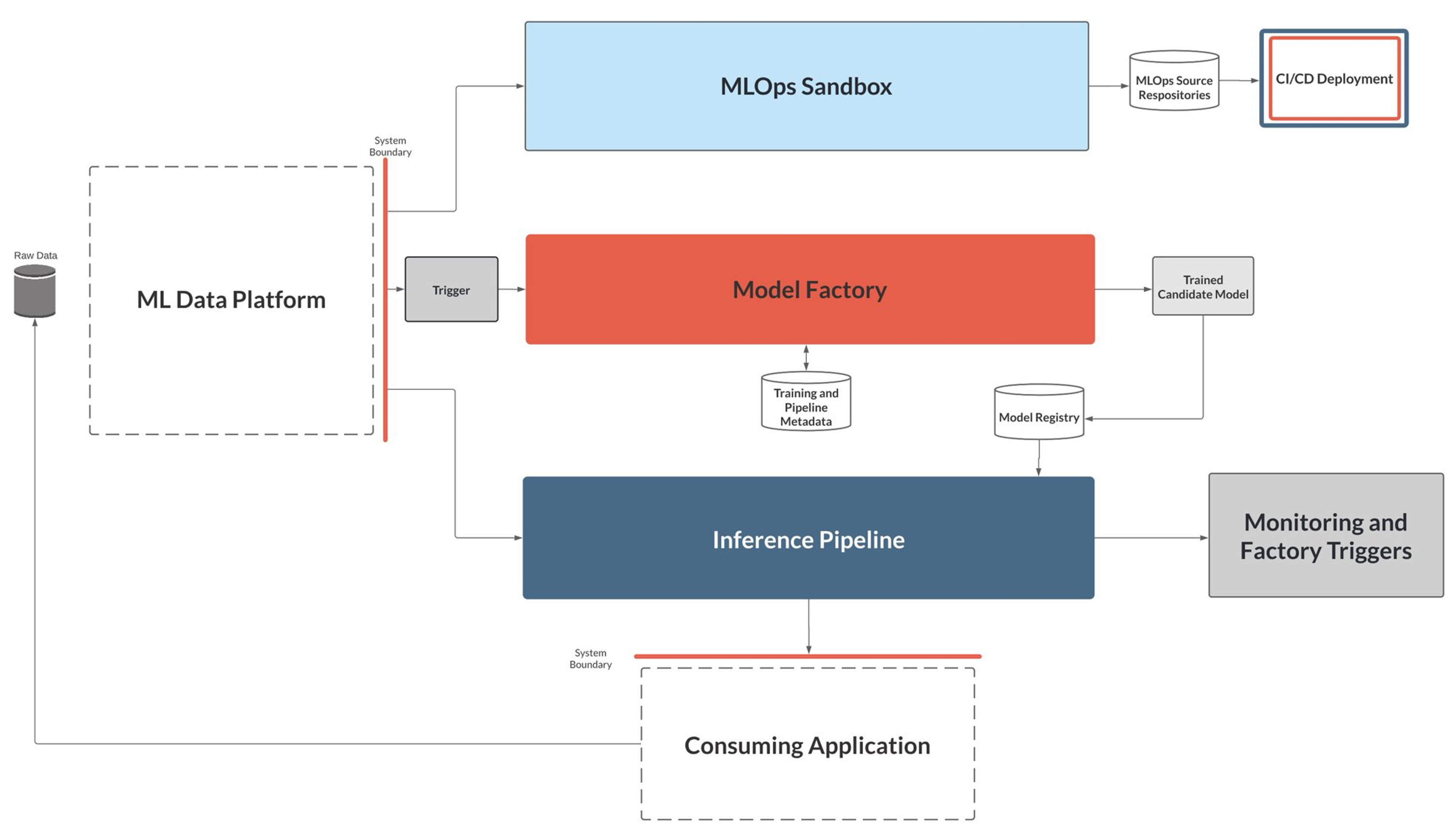

As systems build in complexity, it becomes imperative for software automation to play a bigger role in managing workflows. Continuous integration/continuous deployment (CI/CD) is a critical approach that decreases development complexity by streamlining entire AI/ML workflows through built-in automation. The end goal is to automate the entire model development process, from data preparation to model training to deployment and monitoring using MLOps tools, an approach commonly referred to as the model factory. This endeavor is supported by DataOps, a set of technical practices enabling seamless data flow from producers to consumers, and a data marketplace, which provides a platform for sharing and monetizing data.

Figure 3: Credera’s MLOps reference architecture.
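To make the model factory idea more concrete, the sketch below chains data preparation, training, evaluation, a champion/challenger gate, and deployment as plain functions that a CI/CD job could run on every change. Everything here is hypothetical and deliberately tiny: a one-feature threshold classifier stands in for a real model, and an in-memory dict stands in for a model registry. Real pipelines would use an orchestrator and dedicated MLOps tooling for each stage.

```python
def prepare_data(ctx):
    """Drop incomplete rows and hold out every fourth row for evaluation."""
    rows = [(x, y) for x, y in ctx["raw"] if x is not None and y is not None]
    return {**ctx,
            "train": [r for i, r in enumerate(rows) if i % 4 != 0],
            "holdout": rows[::4]}


def train(ctx):
    """Toy model: threshold halfway between the mean feature value of each class."""
    pos = [x for x, y in ctx["train"] if y == 1]
    neg = [x for x, y in ctx["train"] if y == 0]
    return {**ctx, "threshold": (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2}


def evaluate(ctx):
    t = ctx["threshold"]
    correct = sum((x > t) == (y == 1) for x, y in ctx["holdout"])
    return {**ctx, "accuracy": correct / len(ctx["holdout"])}


def gate(ctx):
    """Only promote the challenger if it beats the current production (champion) model."""
    return {**ctx, "halt": ctx["accuracy"] <= ctx["champion_accuracy"]}


def deploy(ctx):
    ctx["registry"]["production"] = {"threshold": ctx["threshold"], "accuracy": ctx["accuracy"]}
    return {**ctx, "deployed": True}


STAGES = [prepare_data, train, evaluate, gate, deploy]


def run_pipeline(stages, ctx):
    """Run stages in order, logging each one and stopping early if a stage sets 'halt'."""
    log = []
    for stage in stages:
        ctx = stage(ctx)
        log.append(stage.__name__)
        if ctx.get("halt"):
            break
    return {**ctx, "log": log}


raw = [(x, int(x > 5)) for x in range(11)] + [(None, 1)]
registry = {}
result = run_pipeline(STAGES, {"raw": raw, "champion_accuracy": 0.9, "registry": registry})
print(result["log"], result["accuracy"], registry)
```

Keeping each stage a pure function of a context dict makes the stages easy to test in isolation and to rerun automatically, which is what lets a CI/CD system own the whole path from data to deployment.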

Dive in: Credera client examples

An invoice factoring company, dissatisfied with its existing document classification and information extraction software, worked with Credera on a comprehensive analysis of its processes and business objectives. Following a discovery phase, Credera recommended using optical character recognition (OCR) to address these challenges. Through a working POC, the joint team demonstrated real-time insights and workload automation, which led to the development of a production-ready platform. This custom platform enabled the business to deploy AI/ML solutions enterprise-wide, improve its data sources, and optimize its business processes, resulting in greater efficiency and a competitive advantage.

Credera also assisted a $30 billion energy and utilities provider with data discovery issues, including limited knowledge sharing, poor understanding of data, and time-consuming searches. The team developed personas for data users, created journey maps, and built a customized data marketplace with a user-friendly interface. This enhanced user experience allowed executives to quickly find and utilize data products to inform decision-making. The marketplace also provided information about data owners and source owners, building trust with the users.

Another initiative at the same energy provider involved migrating code to meet the scalability demands of modern data pipelines and teams. As the client’s data systems grew in complexity, its codebase needed to evolve in tandem with more advanced technologies to better serve customers. In addition, as the number of data teams grew, each developed redundant yet slightly different ML practices, and development environments became siloed, hindering reusability and collaboration. Credera helped facilitate a code migration that consolidated the teams onto a single stack and standardized their ML workflow from experimentation to deployment.

Moving forward on the AI/ML roadmap

Recent advances have given organizations ample reason to invest in AI/ML tools to meet and exceed their business goals. Some have already invested heavily and are well on their way along the journey, while others have yet to fully dive in. By outlining these three stages of maturity on the AI/ML roadmap, we hope that a clearer path with defined milestones has been laid out for organizations to follow.

We believe following a strategic, long-term roadmap is critical for organizations to progress from isolated experimentation to full integration of AI/ML and establishment of a data-driven culture. If you are looking to ramp up your own enterprise’s AI/ML journey, reach out to us at findoutmore@credera.com.
